Updates and whatnot #2

It’s been a bit too long since the last update (literally a year), and since the last post as well. Honestly, this being the first post of the year is shocking to me in many ways.

But now that the spring semester has ended and the summer period has begun, I have a bit more free time on my hands (looking for an internship amidst COVID-19 is something else). I figured I’d write up this update to note down a few things I don’t want to forget about a coursework project from this semester.

In the Machine Learning class, the project we chose was based on CNNs: we aimed to build a model that classifies signs from the ASL alphabet.

I would note that we could’ve made it more useful for ASL in general, such as by including frequently used gestures, but given the limitations we had, classifying 224x224 images into 29 classes was already tough.

I’m not going to go over everything we did in the project, but I’ll note down a number of things I had to deal with, especially related to TensorFlow versions, tf.data.Dataset and training.

For various reasons related to it being a private repo that I can’t fork, I won’t post the link to the repo here.

So throughout the project, I think two of us (in a group of 5) actually wrote code related to training and testing the model.

We both used Keras and TensorFlow, which was convenient, but I had mostly used version 2.0.0 via Miniconda up to that point, whereas the other member used the latest version at the time, 2.4.1 from pip.

Surprisingly, there were almost no major issues related to using different versions, except for one.

Initially, the script used to prepare the data relied on Keras’s ImageDataGenerator, a massively convenient built-in class for preparing image datasets, together with its flow_from_directory method.

Unfortunately, since the loaded dataset was ordered, it had to be shuffled, so shuffle=True was set in the call to flow_from_directory.

```python
# just a snippet from source
import tensorflow as tf

# `split` and `path` are defined elsewhere in the original script
image_data_generator = tf.keras.preprocessing.image.ImageDataGenerator(
    rescale=1. / 255.,
    validation_split=split,
    zoom_range=[0.9, 1.2],
    horizontal_flip=False,
    width_shift_range=0.20,
    height_shift_range=0.20,
    rotation_range=10,
    brightness_range=[0.9, 1.1])

training_generator = image_data_generator.flow_from_directory(
    directory=path,
    batch_size=32,
    seed=42,
    shuffle=True,
    subset="training",
    target_size=(64, 64))
```

For some reason, this made training really slow on my machine: it took about 40 minutes to shuffle the data and another 40 minutes to fit per epoch. That came to 80 minutes per epoch, which was unacceptable to me. The other member’s setup didn’t take nearly as long, and after a couple of days of not finding a solution, I rewrote the data preparation script for my own purposes. There I ran into yet another problem worth noting.

This time, I manually loaded all the data into proper tf.data.Dataset objects and trained the model, seemingly to great results. Or so it seemed.

From what I could tell, the validation accuracy reported during training was ridiculously good (like 85+% on the first epoch! and then 95+% by the third!!!), which I was only mildly suspicious of because I had deliberately built a model to overfit the training data at first.

Then, when I excitedly ran model.evaluate(), the accuracy it reported was … below 1%. And it wasn’t just on the test set; the same happened on the validation and training sets as well.

Admittedly, I spent way too much time debugging this problem, trying out fixes like adding dropout layers or batch normalization layers to regularize the model (I still thought it was overfitting tremendously).

The internet (and its various forums) didn’t help much either.
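A sanity check that can help narrow down this kind of mismatch is to compute the accuracy by hand and compare it with what model.evaluate() reports. The sketch below is only an illustration, not what I ran at the time: `manual_accuracy`, `toy_predict` and `toy_batches` are made-up names, and a toy stand-in replaces the real model so that the snippet runs on its own with just NumPy.

```python
import numpy as np


def manual_accuracy(predict_fn, batches):
    """Fraction of samples whose argmax prediction matches the integer label.

    predict_fn: callable mapping a batch of inputs to per-class scores.
    batches: iterable of (inputs, integer_labels) pairs.
    """
    correct = 0
    total = 0
    for inputs, labels in batches:
        scores = np.asarray(predict_fn(inputs))
        predicted = scores.argmax(axis=-1)
        labels = np.asarray(labels).reshape(-1)
        correct += int((predicted == labels).sum())
        total += labels.size
    return correct / total if total else 0.0


# Toy stand-in for a model (hypothetical): the score for class k is
# highest when the input value equals k.
def toy_predict(inputs):
    inputs = np.asarray(inputs)
    return -np.abs(inputs[:, None] - np.arange(3)[None, :])


toy_batches = [
    (np.array([0, 1, 2]), np.array([0, 1, 2])),  # all three correct
    (np.array([2, 2]), np.array([2, 0])),        # one correct, one wrong
]
toy_accuracy = manual_accuracy(toy_predict, toy_batches)
print(toy_accuracy)
```

With a real Keras model, something along the lines of `manual_accuracy(model.predict_on_batch, valid_ds)` should work in eager mode, assuming the dataset yields (image, integer label) batches. If the hand-computed number agrees with the training log but not with model.evaluate(), the metric is the likelier suspect rather than the model itself.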

At this point, I was on TensorFlow version 2.3.0, to take advantage of the Keras preprocessing layers in tf.keras.layers.experimental.preprocessing.

So I tried removing those layers, to no avail. Then I tried going back to my tried and tested TF v2.0.0 (which meant a third complete rewrite of the code).

And it now worked.

For the record, this was the tensorflow-gpu package from the anaconda channel, not from conda-forge, which I typically use (and which, as of writing this post, updated to TF v2.4.1 just 13 days ago).

Ultimately, I still couldn’t find out why that version refused to work; the comments I came across blamed everything from errors in batch accuracy calculations to memory sizes.

So, I suppose I’ll stick to TF v2.0.0 for the time being and manually prepare the data.
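Since the same script had to run on both my 2.0.0 install and my teammate’s newer one, one option is to gate version-specific features behind a small check. This is just a sketch of the idea: `version_tuple` and `supports_preprocessing_layers` are hypothetical helper names, and the 2.3.0 threshold is simply the version I mentioned above for the experimental preprocessing layers.

```python
def version_tuple(version):
    """Turn a string such as '2.4.1' or '2.4.0-rc1' into a tuple like (2, 4, 1)."""
    parts = []
    for piece in version.split(".")[:3]:
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        parts.append(int(digits) if digits else 0)
    return tuple(parts)


def supports_preprocessing_layers(tf_version):
    # Assumption taken from this post: the experimental Keras preprocessing
    # layers are usable from 2.3.0 onward.
    return version_tuple(tf_version) >= (2, 3, 0)


print(supports_preprocessing_layers("2.0.0"))  # False
print(supports_preprocessing_layers("2.4.1"))  # True
```

In a real script the argument would be `tf.__version__`, and the False branch would fall back to plain `x / 255.` style preprocessing inside `Dataset.map`.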

On a slightly different note, my teammate managed to train a model with 94% accuracy, using about 4 convolutional layers (with filters from 64 to 512) and a softmax output layer. Though I haven’t verified this with his model (remember, some features he used in his training script weren’t available in 2.0.0), it is rather impressive, if somewhat dubious. The confusion matrices were there and all, though, so I suppose it works fine. On the other hand, I tried training a more complex model, complete with batch normalization layers and 2 more layers, only to achieve 64% accuracy at best. I suppose it overfitted at some point. I would get back to figuring this out, if only training didn’t take 4 hours (for like 4 epochs).

```python
# the function I ended up with for data preparation and generation
import os
import pathlib

import numpy as np
import tensorflow as tf


def get_train_dataset(path, augment=False, split=0.2):
    img_height = 224
    img_width = 224
    image_size = (img_width, img_height)
    batch_size = 32
    # normalization = tf.keras.layers.experimental.preprocessing.Rescaling(1./255) <-- see, this would've worked on v2.3.0
    # AUTOTUNE = tf.data.AUTOTUNE

    data_dir = pathlib.Path(path)
    image_count = len(list(data_dir.glob('*/*.jpg')))
    print("Number of image samples: {}".format(image_count))

    list_ds = tf.data.Dataset.list_files(str(data_dir/'*/*'), shuffle=False)
    # shuffle once and keep that order, so take()/skip() below give a stable split
    list_ds = list_ds.shuffle(image_count, reshuffle_each_iteration=False)

    # get class names (one sub-directory per class)
    class_names = np.array(sorted([item.name for item in data_dir.glob("*") if item.name != "LICENSE.txt"]))
    print(class_names)

    valid_size = int(image_count * split)
    train_ds = list_ds.skip(valid_size)
    valid_ds = list_ds.take(valid_size)

    # Utility functions grabbed from tf docs

    def get_label(file_path):
        # convert the path to a list of path components
        parts = tf.strings.split(file_path, os.path.sep)
        # The second to last is the class-directory
        one_hot = parts[-2] == class_names
        # Integer encode the label
        return tf.argmax(tf.cast(one_hot, tf.int64))

    def decode_img(img):
        # convert the compressed string to a 3D uint8 tensor
        img = tf.image.decode_jpeg(img, channels=3)
        # resize the image to the desired size
        return tf.image.resize(img, [img_height, img_width])

    def process_path(file_path):
        label = get_label(file_path)
        # load the raw data from the file as a string
        img = tf.io.read_file(file_path)
        img = decode_img(img)
        return img, label

    def configure_for_performance(ds):
        # ds = ds.cache()
        ds = ds.shuffle(1000)
        ds = ds.batch(batch_size)
        ds = ds.prefetch(1)
        return ds

    train_ds = train_ds.map(process_path)
    valid_ds = valid_ds.map(process_path)

    # rescale = tf.keras.Sequential([
    #     tf.keras.layers.experimental.preprocessing.Rescaling(1./255)
    # ])

    def preprocess(x):
        # the Keras helpers work on NumPy arrays, hence the numpy_function wrappers
        x = tf.numpy_function(lambda x: tf.keras.preprocessing.image.random_rotation(x, 15, row_axis=0, col_axis=1, channel_axis=2), [x], tf.float32)
        # NOTE: random_zoom samples the zoom factor uniformly between the two values,
        # so (0.2, 0.2) always applies a fixed factor of 0.2; a range such as
        # (0.8, 1.2) may be closer to what I actually wanted
        x = tf.numpy_function(lambda x: tf.keras.preprocessing.image.random_zoom(x, (0.2, 0.2), row_axis=0, col_axis=1, channel_axis=2), [x], tf.float32)
        x = tf.image.random_brightness(x, 0.1)
        # numpy_function drops the static shape, so restore it
        return tf.reshape(x, [img_height, img_width, 3])

    train_ds = train_ds.map(lambda x, y: (x / 255., y))
    if augment:
        train_ds = train_ds.map(lambda x, y: (preprocess(x), y))
    valid_ds = valid_ds.map(lambda x, y: (x / 255., y))

    train_ds = configure_for_performance(train_ds)
    valid_ds = configure_for_performance(valid_ds)

    # if augment:
    #     data_augmentation = tf.keras.Sequential([
    #         # tf.keras.layers.experimental.preprocessing.RandomFlip("horizontal"),
    #         tf.keras.layers.experimental.preprocessing.RandomRotation(0.05),
    #         tf.keras.layers.experimental.preprocessing.RandomZoom(0.2, 0.2)
    #     ])
    #     train_ds = train_ds.map(lambda x, y: (data_augmentation(x, training=True), y))

    return train_ds, valid_ds
```
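One detail in the function above that is easy to gloss over is `reshuffle_each_iteration=False` on the file list. Because the train/validation split is done with `skip()` and `take()` on that list, the order has to stay the same across epochs, or files would wander between the two sets. Here is a small pure-Python analogy of that (no TensorFlow involved; `split` and the fake file names are made up for illustration):

```python
import random


def split(files, valid_fraction):
    """Mimic list_ds.skip(n) / list_ds.take(n) on an already ordered list."""
    n_valid = int(len(files) * valid_fraction)
    return files[n_valid:], files[:n_valid]  # (train, valid)


files = ["img_{:02d}.jpg".format(i) for i in range(20)]
epochs = 10

# Shuffle once and reuse the same order every epoch
# (the analogue of reshuffle_each_iteration=False).
fixed_order = files[:]
random.Random(42).shuffle(fixed_order)
fixed_valid_sets = [set(split(fixed_order, 0.2)[1]) for _ in range(epochs)]
fixed_is_stable = all(s == fixed_valid_sets[0] for s in fixed_valid_sets)

# Reshuffle before every epoch (the analogue of reshuffle_each_iteration=True).
rng = random.Random(42)
seen_in_train = set()
seen_in_valid = set()
for _ in range(epochs):
    order = files[:]
    rng.shuffle(order)
    train, valid = split(order, 0.2)
    seen_in_train.update(train)
    seen_in_valid.update(valid)

# Files that ended up on both sides of the split at some point.
leaked = seen_in_train & seen_in_valid

print("fixed split is stable:", fixed_is_stable)
print("files seen on both sides when reshuffling:", len(leaked))
```

With the one-time shuffle, the validation set is identical every epoch; with per-epoch reshuffling, validation files end up being trained on sooner or later, which would make the validation accuracy look better than it really is.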

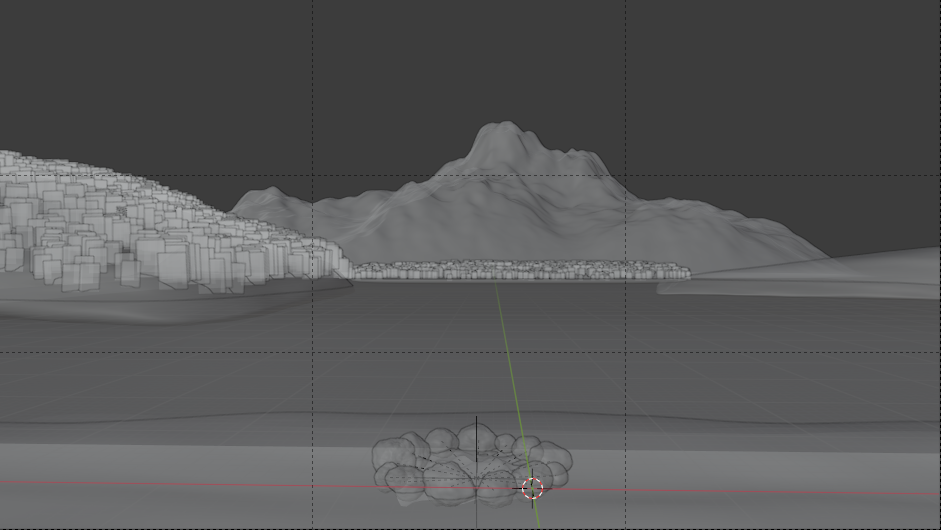

As a final point, I’m currently working on a new piece in Blender; it’s a large-scale environment, initially inspired by the scenery in Yuru Camp (an anime that helps with being stuck indoors due to COVID-19) and limited by my amateurish skills.

I think I’ll write a post reflecting on its creation when I’m done because there are a couple of techniques I need to note down for future reference.

I’ll also link to my past Blender renders here on ArtStation, although looking back, some of them have serious lighting and color problems.

Well, like I said, amateurish.

Here’s a preview:

(I still have problems with texturing too!)